Download | Testing Sentinel-5P download code


ytkz

Introduction

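The Sentinel-5P mission is designed to provide air-quality and climate information and services from 2017 until at least 2023. With its onboard TROPOMI sensor it observes the key atmospheric constituents globally every day, including ozone, nitrogen dioxide, sulfur dioxide, carbon monoxide, methane, formaldehyde, and cloud and aerosol properties. The mission is also meant to ensure data continuity between the retirement of the Envisat satellite and NASA's Aura mission on one side and the launch of Sentinel-5 on the other.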

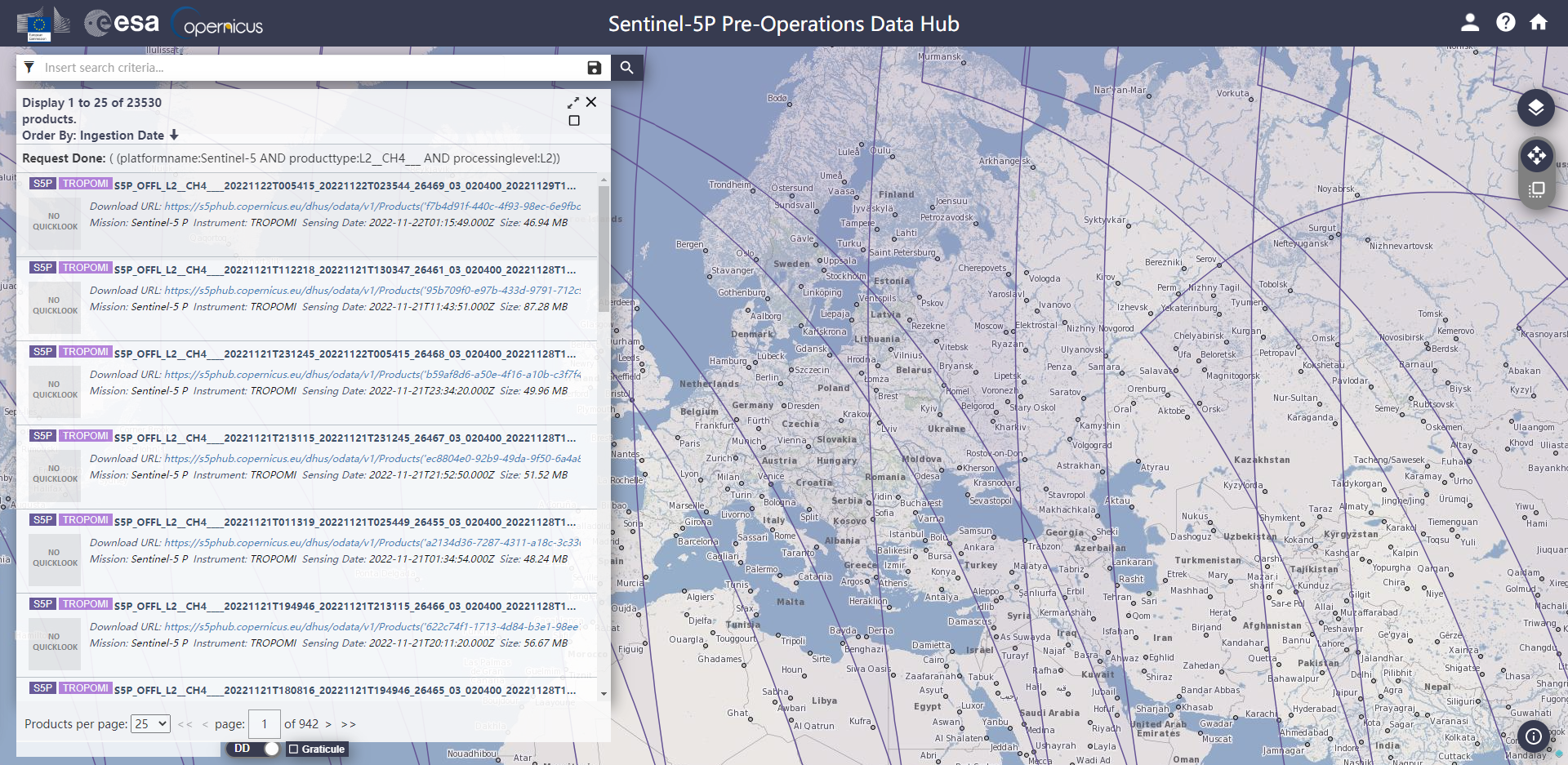

Portal for manual search and download:

https://s5phub.copernicus.eu/dhus/#/home

The hub provides an official guest account for downloading S5P data:

account = 's5pguest'

password = 's5pguest'

With this account, we try to automate the download.
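The demo below logs in with `requests.auth.HTTPBasicAuth`. As a minimal sketch of what that sends, assuming standard HTTP Basic authentication, the guest credentials are joined as `username:password` and base64-encoded into an `Authorization` header. The helper name `basic_auth_header` is hypothetical:

```python
import base64


def basic_auth_header(username: str, password: str) -> str:
    """Build the value of an HTTP Basic 'Authorization' header.

    'username:password' is base64-encoded and prefixed with 'Basic ',
    which is what requests' HTTPBasicAuth adds to each request for you.
    """
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return "Basic " + token


if __name__ == "__main__":
    print({"Authorization": basic_auth_header("s5pguest", "s5pguest")})
```

Base64 is an encoding, not encryption, so these credentials are only protected by the HTTPS connection itself.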

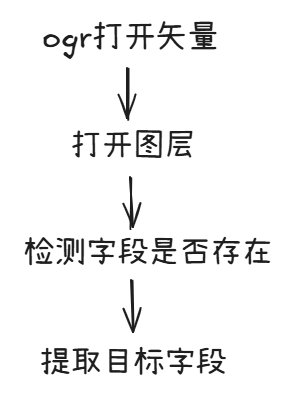

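The workflow is as follows:

1. Specify the area the data should cover.
2. Specify the start and end dates.
3. Query the uuid of each scene (product).
4. Download each product to the specified output path.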
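Steps 1 to 3 boil down to assembling one search URL from the area, the dates and the product type. The sketch below assumes the same `api/stub/products` filter syntax that the demo uses; instead of typing `%20` and `%22` by hand, it writes the filter readably and lets `urllib.parse.quote` encode it, keeping `( ) [ ] : ,` unescaped so the result matches the demo's hand-encoded URL. The function name `build_query_url` is hypothetical:

```python
from urllib.parse import quote

BASE_URL = "https://s5phub.copernicus.eu/dhus/api/stub/products"


def build_query_url(wkt, date_from, date_to, product_type, offset=0, limit=25):
    """Turn area, dates and product type into the hub's product-search URL.

    wkt uses plain spaces, e.g. "POLYGON((100 20,120 20,120 40,100 20))";
    date_from / date_to are "YYYY-MM-DD" strings.
    """
    time_range = f"[{date_from}T00:00:00.000Z TO {date_to}T23:59:59.999Z]"
    readable_filter = (
        f'( footprint:"Intersects({wkt})")'
        f" AND ( beginPosition:{time_range} AND endPosition:{time_range} )"
        f" AND (  (platformname:Sentinel-5 AND producttype:{product_type}"
        " AND processinglevel:L2 AND processingmode:Near real time))"
    )
    # Encode spaces (%20) and double quotes (%22), keep ( ) [ ] : , as-is
    encoded = quote(readable_filter, safe="()[]:,")
    return (f"{BASE_URL}?filter={encoded}"
            f"&offset={offset}&limit={limit}&sortedby=ingestiondate&order=desc")
```

Step 4 then only needs the uuids from the response of this URL.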

Demo

The automated download demo is as follows:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import datetime
import os
import re
import time

from requests import Session
from requests.auth import HTTPBasicAuth

# Product type constants (Sentinel-5P Level-2 products)
OZONE_TOTAL = 'L2__O3____'
OZONE_TROPOSPHERIC = 'L2__O3_TCL'
OZONE_PROFILE = 'L2__O3__PR'
OZONE_TROPOSPHERIC_PROFILE = 'L2__O3_TPR'
NITROGEN_DIOXIDE = 'L2__NO2___'
SULFUR_DIOXIDE = 'L2__SO2___'
CARBON_MONOXIDE = 'L2__CO____'
METHANE = 'L2__CH4___'
FORMALDEHYDE = 'L2__HCHO__'

# Local HTTP proxy (the hub is in Europe); change the address for your setup
proxies = {
    "http": "http://127.0.0.1:7890",
    "https": "http://127.0.0.1:7890",
}

# Guest account provided by the hub
LOGIN = 's5pguest'
PASSWORD = 's5pguest'
LOGIN_URL = 'https://s5phub.copernicus.eu/dhus////login'
HEADERS = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 '
                  '(KHTML, like Gecko) Chrome/89.0.4389.128 Safari/537.36'
}


def open_session():
    """Create a requests Session and log in with the guest account."""
    s = Session()
    s.auth = HTTPBasicAuth(LOGIN, PASSWORD)  # send credentials with every request
    payload = {"login_username": LOGIN, "login_password": PASSWORD}
    s.post(LOGIN_URL, data=payload, headers=HEADERS, proxies=proxies)
    return s


def s5_quarry(days='', wkt='', product_type='', ingestion_date_FROM='',
              ingestion_date_TO='', full_response=False):
    """Query products; return their uuids, or the raw response text if full_response is True."""
    # Querying the last `days` days overrides the explicit dates
    if days != '':
        date_to = datetime.date.today()
        date_from = date_to - datetime.timedelta(days=int(days))
        ingestion_date_FROM, ingestion_date_TO = str(date_from), str(date_to)

    # Products whose footprint intersects the WKT polygon and whose sensing
    # start/end times lie in the date range (the filter is already URL-encoded)
    query = (
        'https://s5phub.copernicus.eu/dhus/api/stub/products?filter='
        f'(%20footprint:%22Intersects({wkt})%22)'
        f'%20AND%20(%20beginPosition:[{ingestion_date_FROM}T00:00:00.000Z%20TO%20{ingestion_date_TO}T23:59:59.999Z]'
        f'%20AND%20endPosition:[{ingestion_date_FROM}T00:00:00.000Z%20TO%20{ingestion_date_TO}T23:59:59.999Z]%20)'
        f'%20AND%20(%20%20(platformname:Sentinel-5%20AND%20producttype:{product_type}'
        '%20AND%20processinglevel:L2%20AND%20processingmode:Near%20real%20time))'
        '&offset=0&limit=25&sortedby=ingestiondate&order=desc'
    )

    with open_session() as s:
        r = s.get(query, headers=HEADERS, proxies=proxies)
        r.raise_for_status()

    if full_response:
        return r.text
    # Extract the uuid of each product from the response
    pat_ = re.compile("<uuid>" + '(.*?)' + "</uuid>", re.S)
    return pat_.findall(r.text)


def download_product(uuid, output_path):
    """Download the product with the given uuid into the directory output_path."""
    # e.g. https://s5phub.copernicus.eu/dhus/odata/v1/Products('f7b4d91f-440c-4f93-98ec-6e9fbdcc206f')/$value
    url = "https://s5phub.copernicus.eu/dhus/odata/v1/Products('%s')/$value" % uuid
    with open_session() as s:
        # stream=True writes the file chunk by chunk instead of holding it in memory
        with s.get(url, headers=HEADERS, proxies=proxies, stream=True) as r:
            r.raise_for_status()
            # Take the file name from the Content-Disposition header
            m = re.search(r'filename="?([^";]+)"?', r.headers.get('Content-Disposition', ''))
            filename = m.group(1) if m else uuid + '.nc'  # fallback name (assumption)
            print('Downloading', filename)
            with open(os.path.join(output_path, filename), 'wb') as f:
                for chunk in r.iter_content(chunk_size=1024 * 1024):
                    if chunk:  # filter out keep-alive chunks
                        f.write(chunk)


if __name__ == '__main__':
    time_start = time.time()
    # East Asia as a WKT polygon (lon lat pairs, spaces URL-encoded as %20)
    boundary = 'POLYGON((85.95280241129068%2043.18650535330309,119.36302793328738%2042.13659362743459,125.07417759516719%2035.46913658316771,119.07747045019343%2020.9348221528751,108.79740105880981%2020.13258590671309,83.0972275803508%2034.297939612206335,85.95280241129068%2043.18650535330309,85.95280241129068%2043.18650535330309))'
    uuid_list = s5_quarry(ingestion_date_FROM='2022-11-20', ingestion_date_TO='2022-11-30',
                          wkt=boundary, product_type=NITROGEN_DIOXIDE, full_response=False)
    outpath = './'
    print('ready to download %d products' % len(uuid_list))
    for uuid in uuid_list:
        print('download', uuid)
        download_product(uuid, outpath)
    print('done')
    print('elapsed: %.1f s' % (time.time() - time_start))
```
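`s5_quarry` pulls the uuids out of the response with a non-greedy regex between `<uuid>` tags. A slightly stricter sketch, assuming the response embeds the ids in exactly those tags (as the demo's regex expects), also validates each match with Python's `uuid` module and drops duplicates while keeping their order. The name `extract_uuids` is hypothetical:

```python
import re
import uuid

# Same idea as the demo's pattern, but tolerant of whitespace around the id
UUID_TAG = re.compile(r"<uuid>\s*(.*?)\s*</uuid>", re.S)


def extract_uuids(text):
    """Return product uuids found between <uuid> tags, in order, without duplicates.

    Matches that are not well-formed UUIDs are skipped.
    """
    seen = set()
    result = []
    for raw in UUID_TAG.findall(text):
        try:
            value = str(uuid.UUID(raw))  # normalises to lowercase 8-4-4-4-12 form
        except ValueError:
            continue  # not a valid UUID, ignore it
        if value not in seen:
            seen.add(value)
            result.append(value)
    return result
```

Skipping malformed matches means a change in the response layout yields an empty list rather than download URLs built from garbage.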

The `s5_quarry` function performs the data query. The query URL it sends looks like this:

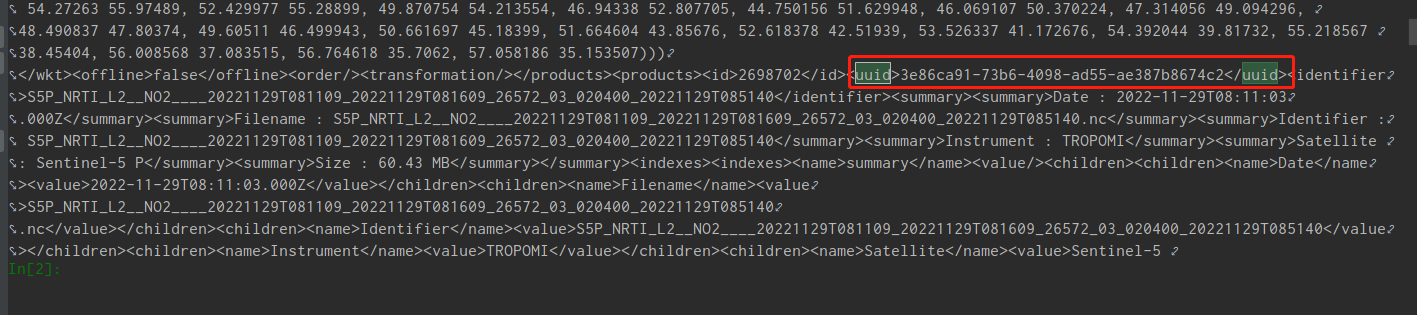

https://s5phub.copernicus.eu/dhus/api/stub/products?filter=(%20footprint:%22Intersects(POLYGON((85.95280241129068%2043.18650535330309,119.36302793328738%2042.13659362743459,125.07417759516719%2035.46913658316771,119.07747045019343%2020.9348221528751,108.79740105880981%2020.13258590671309,83.0972275803508%2034.297939612206335,85.95280241129068%2043.18650535330309,85.95280241129068%2043.18650535330309)))%22)%20AND%20(%20beginPosition:[2021-07-28T00:00:00.000Z%20TO%202021-07-28T23:59:59.999Z]%20AND%20endPosition:[2021-07-28T00:00:00.000Z%20TO%202021-07-28T23:59:59.999Z]%20)%20AND%20(%20%20(platformname:Sentinel-5%20AND%20producttype:L2__NO2___%20AND%20processinglevel:L2%20AND%20processingmode:Near%20real%20time))&offset=0&limit=25&sortedby=ingestiondate&order=desc

The uuid of each matching product then has to be extracted from the response, using a regular expression (`re`):

```python
import re

# r is the response returned by the query request in s5_quarry
pat_ = re.compile("<uuid>" + '(.*?)' + "</uuid>", re.S)
uuidList = pat_.findall(r.text)
```

Summary

`boundary` is the area the data should cover, given as a WKT polygon; here it covers East Asia.
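The `boundary` string lists longitude/latitude pairs with the space inside each pair already URL-encoded as `%20`, and the ring is closed by repeating the first point. A small sketch, with the hypothetical helper name `make_polygon_wkt`, that produces a string in that form from a list of (lon, lat) tuples:

```python
def make_polygon_wkt(points, encode_spaces=True):
    """Build 'POLYGON((lon lat,...))' from (lon, lat) pairs, closing the ring if needed.

    With encode_spaces=True the space in each pair becomes %20, like the demo's boundary.
    """
    if len(points) < 3:
        raise ValueError("a polygon needs at least 3 points")
    ring = list(points)
    if ring[0] != ring[-1]:
        ring.append(ring[0])  # WKT rings must end where they start
    for lon, lat in ring:
        if not (-180 <= lon <= 180 and -90 <= lat <= 90):
            raise ValueError(f"invalid lon/lat pair: {lon}, {lat}")
    sep = "%20" if encode_spaces else " "
    coords = ",".join(f"{lon}{sep}{lat}" for lon, lat in ring)
    return f"POLYGON(({coords}))"
```

WKT writes x before y, so each pair is longitude first, then latitude.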

The script is not finished yet: both the dates and the area are hard-coded, which should be improved later.
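One way to remove the hard-coded values is to read them from the command line. This is a sketch under assumptions, not part of the original script: the option names `--start`, `--end`, `--days` and `--bbox` and the function `parse_args` are hypothetical. It returns a start date, an end date and a rectangular WKT polygon in the same `%20`-encoded form as `boundary`, which can be passed to `s5_quarry` as `ingestion_date_FROM`, `ingestion_date_TO` and `wkt`:

```python
import argparse
import datetime


def parse_args(argv=None):
    """Hypothetical command-line options replacing the hard-coded dates and area."""
    p = argparse.ArgumentParser(description="Sentinel-5P download (sketch)")
    p.add_argument("--start", help="start date, YYYY-MM-DD")
    p.add_argument("--end", help="end date, YYYY-MM-DD")
    p.add_argument("--days", type=int, help="query the last N days instead of --start/--end")
    p.add_argument("--bbox", type=float, nargs=4, metavar=("W", "S", "E", "N"),
                   required=True, help="bounding box in degrees")
    args = p.parse_args(argv)

    # --days takes precedence, mirroring the `days` argument of s5_quarry
    if args.days is not None:
        end = datetime.date.today()
        start = end - datetime.timedelta(days=args.days)
    elif args.start and args.end:
        start = datetime.date.fromisoformat(args.start)
        end = datetime.date.fromisoformat(args.end)
    else:
        p.error("give either --days or both --start and --end")  # exits
    if start > end:
        p.error("start date is after end date")

    w, s, e, n = args.bbox
    # Rectangle as a closed WKT ring, spaces encoded as %20 like the demo's boundary
    wkt = f"POLYGON(({w}%20{s},{e}%20{s},{e}%20{n},{w}%20{n},{w}%20{s}))"
    return start.isoformat(), end.isoformat(), wkt
```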

Because the server is in Europe, a proxy has to be passed to `requests` through `proxies`, and the overall download speed is very slow.
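The demo hard-codes the proxy address `127.0.0.1:7890`. A small sketch, with the hypothetical helper name `get_proxies`, that prefers the conventional `HTTPS_PROXY` / `HTTP_PROXY` environment variables and falls back to that local address. Note that `requests` already picks these variables up on its own when no `proxies` argument is given, so the helper mainly makes the fallback explicit:

```python
import os


def get_proxies(default="http://127.0.0.1:7890"):
    """Return a requests-style proxies dict.

    Uses HTTPS_PROXY / HTTP_PROXY (upper or lower case) when set, otherwise
    the local proxy address from the demo; default=None disables the fallback.
    """
    https = os.environ.get("HTTPS_PROXY") or os.environ.get("https_proxy")
    http = os.environ.get("HTTP_PROXY") or os.environ.get("http_proxy")
    if not (https or http):
        if default is None:
            return {}
        https = http = default
    # If only one variable is set, use it for both schemes
    return {"http": http or https, "https": https or http}
```

A proxy only makes the hub reachable; it does not by itself make the download faster.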