lee滤波对于sar影像去噪非常有用

lee滤波对于sar影像去噪非常有用

ytkzlee滤波对于sar影像去噪非常有用。且lee滤波也很容易理解,且看以下python代码编写的lee滤波函数。

import numpy as np

from scipy.ndimage import uniform_filter

def lee_filter(img, size, global_variance):

"""

Apply Lee filter for speckle noise reduction using a global variance.

"""

img_mean = uniform_filter(img, (size, size))

img_sqr_mean = uniform_filter(img ** 2, (size, size))

img_variance = img_sqr_mean - img_mean ** 2

# Avoid division by zero or invalid operations

denominator = img_variance + global_variance

denominator[denominator == 0] = 1e-9 # Prevent division by zero

img_weights = img_variance / denominator

img_output = img_mean + img_weights * (img - img_mean)

return img_output但是在实际处理时,我们一般还要做一些处理,比如规定好输入、输出。一般来说,遥感影像是巨大的,所以我们也要考虑到硬件的性能。

以下是我所使用的改进的代码,仅供参考。

import numpy as np

from scipy.ndimage import uniform_filter

from osgeo import gdal

import sys

def translate(band, number=2):

"""

Convert the value of a band to the range 0-255 for visualization

2% linear conversion

"""

if np.all(np.isnan(band)) or not np.any(band):

return np.zeros_like(band, dtype=np.uint8)

max_val = np.nanmax(band)

min_val = np.nanmin(band)

if max_val == 0:

return np.zeros_like(band, dtype=np.uint8)

band = band.astype(np.float64)

band[band == -9999] = np.nan

band[band == 0] = np.nan

band_data = band / max_val * 255

band_data[np.isnan(band_data)] = 0

d2 = np.percentile(band_data, number)

u98 = np.percentile(band_data, 100 - number)

if u98 - d2 == 0:

return np.zeros_like(band, dtype=np.uint8)

maxout = 255

minout = 0

data_8bit_new = minout + ((band_data - d2) / (u98 - d2)) * (maxout - minout)

data_8bit_new[data_8bit_new < minout] = minout

data_8bit_new[data_8bit_new > maxout] = maxout

return data_8bit_new

def read_img(file):

"""

Read a Sentinel-1 GeoTIFF image and return its data, geotransform, projection, and GCPs.

"""

dataset = gdal.Open(file)

if dataset is None:

raise FileNotFoundError(f"Could not open {file}")

img_width = dataset.RasterXSize

img_height = dataset.RasterYSize

img_data = dataset.GetRasterBand(1).ReadAsArray(0, 0, img_width, img_height)

img_data = img_data.astype(float)

img_data[img_data == -9999] = np.nan

img_data[img_data == 0] = np.nan

geotransform = dataset.GetGeoTransform()

if geotransform is None:

raise ValueError("Input image has no geotransform information")

projection = dataset.GetProjection()

# Retrieve GCPs and GCP projection

gcps = dataset.GetGCPs()

gcp_projection = dataset.GetGCPProjection() if gcps else None

return img_data, dataset, geotransform, projection, gcps, gcp_projection

def lee_filter(img, size, global_variance):

"""

Apply Lee filter for speckle noise reduction using a global variance.

"""

img_mean = uniform_filter(img, (size, size))

img_sqr_mean = uniform_filter(img ** 2, (size, size))

img_variance = img_sqr_mean - img_mean ** 2

denominator = img_variance + global_variance

denominator[denominator == 0] = 1e-9

img_weights = img_variance / denominator

img_output = img_mean + img_weights * (img - img_mean)

return img_output

def process_block(full_img_data, block_y, block_x, block_height, block_width, window_size, global_variance):

"""

Process a single block with padding to handle edge effects correctly.

Expects full_img_data to be pre-filled with 0 instead of NaN.

"""

full_height, full_width = full_img_data.shape

pad_size = window_size // 2

y_start_padded = max(0, block_y - pad_size)

y_end_padded = min(full_height, block_y + block_height + pad_size)

x_start_padded = max(0, block_x - pad_size)

x_end_padded = min(full_width, block_x + block_width + pad_size)

padded_block = full_img_data[y_start_padded:y_end_padded, x_start_padded:x_end_padded]

filtered_padded_block = lee_filter(padded_block, window_size, global_variance)

y_start_crop = block_y - y_start_padded

y_end_crop = y_start_crop + block_height

x_start_crop = block_x - x_start_padded

x_end_crop = x_start_crop + block_width

return filtered_padded_block[y_start_crop:y_end_crop, x_start_crop:x_end_crop]

def block_processing(img_data, output_path, dataset, geotransform, projection, gcps, gcp_projection, block_size=512, window_size=7):

"""

Process the image in blocks, replacing NaNs with 0 for filtering and restoring them after.

Preserves Geo Points (geotransform), Coordinate System (projection), and GCPs in output.

"""

full_height, full_width = img_data.shape

driver = gdal.GetDriverByName('GTiff')

if driver is None:

raise ValueError("GDAL GTiff driver not available")

out_dataset = driver.Create(output_path, full_width, full_height, 1, gdal.GDT_Float32)

if out_dataset is None:

raise RuntimeError(f"Failed to create output file: {output_path}")

# Set geospatial metadata

out_dataset.SetGeoTransform(geotransform)

out_dataset.SetProjection(projection)

if gcps and gcp_projection:

print("Applying GCPs to output image...")

out_dataset.SetGCPs(gcps, gcp_projection)

out_band = out_dataset.GetRasterBand(1)

out_band.SetNoDataValue(np.nan)

print("Creating NaN mask...")

nan_mask = np.isnan(img_data)

print("Replacing NaN with 0 for processing...")

processing_data = np.nan_to_num(img_data, nan=0.0)

print("Calculating global image variance (from original data)...")

global_variance = np.nanvar(img_data)

if global_variance == 0:

global_variance = 1e-9

print(f"Global variance: {global_variance}")

print("Starting block processing...")

for y in range(0, full_height, block_size):

for x in range(0, full_width, block_size):

block_height = min(block_size, full_height - y)

block_width = min(block_size, full_width - x)

filtered_block = process_block(processing_data, y, x, block_height, block_width, window_size, global_variance)

mask_block = nan_mask[y:y + block_height, x:x + block_width]

filtered_block[mask_block] = np.nan

out_band.WriteArray(filtered_block, xoff=x, yoff=y)

print("Block processing finished.")

out_band.FlushCache()

out_dataset.FlushCache()

out_band = None

out_dataset = None

print(f"Filtered image saved to {output_path}")

if __name__ == '__main__':

# Update these paths to your local files

testfile = r'F:\360download\S1A_IW_GRDH_1SDV_20180810T103317_20180810T103346_023183_0284CB_E8D4\s1a-iw-grd-vv-20180810t103317-20180810t103346-023183-0284cb-001.tiff'

outputfile = r'D:\lee_filtered_s1a-iw-grd-vv-20180810t103317-20180810t103346-023183-0284cb-003.tif'

try:

print(f"Reading input file: {testfile}")

img_data, dataset, geotransform, projection, gcps, gcp_projection = read_img(testfile)

print(f"Geotransform: {geotransform}")

print(f"Projection: {projection}")

if gcps:

print(f"Found {len(gcps)} GCPs in input image.")

else:

print("No GCPs found in input image.")

block_processing(img_data, outputfile, dataset, geotransform, projection, gcps, gcp_projection, block_size=512, window_size=7)

except FileNotFoundError as e:

print(f"Error: {e}. Please ensure the input file path is correct.")

except Exception as e:

print(f"An unexpected error occurred: {e}")

finally:

dataset = None结果对比

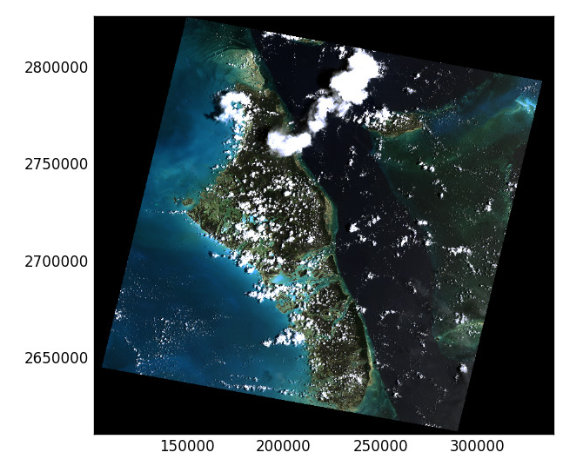

我们使用一景GF3影像做测试,去噪前:

去噪后:

局部放大对比,去噪前:

哨兵1影像的去噪前后对比

去噪前

去噪后

局部放大对比,去噪前:

局部放大对比,去噪后:

再放大对比,去噪前: